Research overview

My research is rooted in the mathematical foundations of data science. It focuses primarily on theory and algorithms for large-scale optimization problems arising in engineering and data science, and on machine learning for graphical data. Most of my research falls into one of the following directions.

First-order methods for large-scale systems

I design, analyze and implement proximal first-order methods for large-scale optimization problems.

Nonlinear and nonconvex optimization with benign structure

I try to identify interesting problem and data structures and design efficient algorithms for these problems.

Stochastic constrained optimization

For smooth nonlinear optimization problems, I study stochastic-gradient-based algorithms that can handle deterministic constraints.

Distributed and decentralized optimization

I study topology design in decentralized optimization and manage the trade-off between communication costs and convergence rate.

Machine learning for graphical data

I aim to build data-centric and robust graph representation models, especially with fairness and privacy concerns.

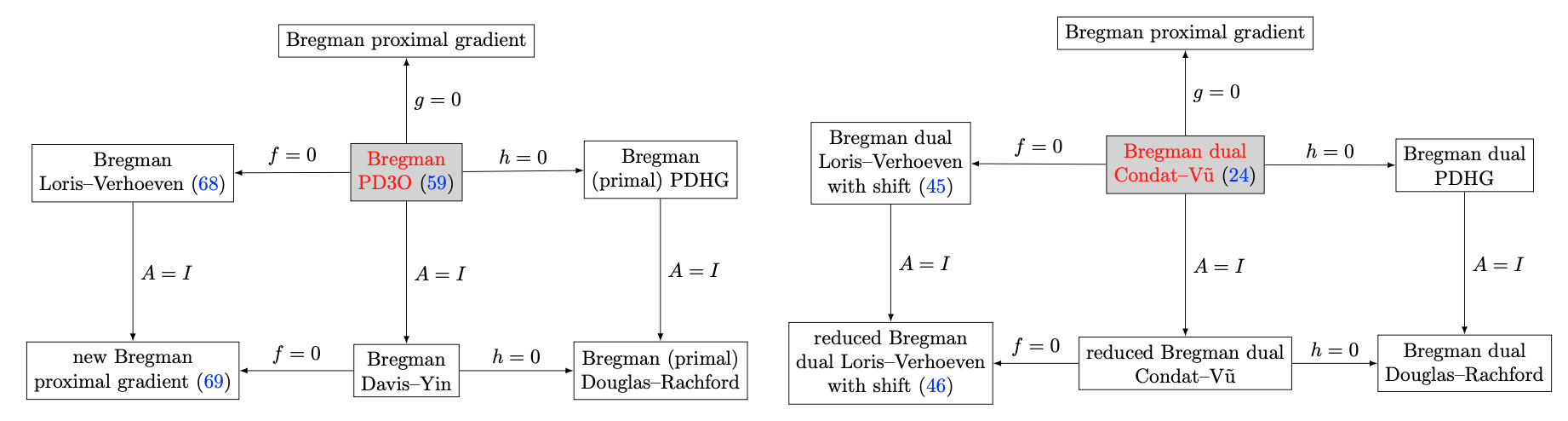

Proximal methods with Bregman distances

Proximal methods have become a standard tool for solving nonsmooth, constrained, large-scale or distributed optimization problems. To make proximal operators cheaper to compute, I am particularly interested in generalized proximal operators based on Bregman distances. A carefully designed Bregman proximal operator can better match the structure of the problem, thus improving the convergence rate of the proximal algorithm. It can also be easier to compute than its Euclidean counterpart, thereby reducing the per-iteration complexity of the algorithm.
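As a minimal illustration of the last point (a textbook example, not a method from the publications listed in this section), consider a linearized proximal step over the probability simplex. With the Kullback-Leibler divergence as the Bregman distance, the step has a closed form (a multiplicative update followed by normalization), whereas the Euclidean version requires a sort-based projection. The function names below are hypothetical and chosen only for this sketch.

```python
import math

def kl_prox_simplex(x, grad, step):
    """Bregman (KL) proximal step on the simplex.

    Solves argmin_u <grad, u> + (1/step) * KL(u || x) over the simplex,
    assuming x has strictly positive entries that sum to one.
    """
    weights = [xi * math.exp(-step * gi) for xi, gi in zip(x, grad)]
    total = sum(weights)
    return [w / total for w in weights]

def euclidean_proj_simplex(v):
    """Euclidean projection of v onto the simplex (sort-based)."""
    u = sorted(v, reverse=True)
    cumulative = 0.0
    theta = 0.0
    for k, uk in enumerate(u, start=1):
        cumulative += uk
        t = (cumulative - 1.0) / k
        if uk - t > 0:
            theta = t  # keep the threshold from the last index that passes
    return [max(vi - theta, 0.0) for vi in v]

x = [1.0 / 3.0] * 3
grad = [1.0, 0.0, -1.0]
step = 0.5

kl_step = kl_prox_simplex(x, grad, step)
euclid_step = euclidean_proj_simplex([xi - step * gi for xi, gi in zip(x, grad)])
print(kl_step, euclid_step)
```

The KL step costs one pass over the vector and keeps every entry strictly positive, while the Euclidean step needs a sort and can set entries exactly to zero.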


Selected publications:

Bregman three-operator splitting methods

X. Jiang, and L. Vandenberghe, 2023

Bregman primal-dual first-order method and application to sparse semidefinite programming

X. Jiang, and L. Vandenberghe, 2022

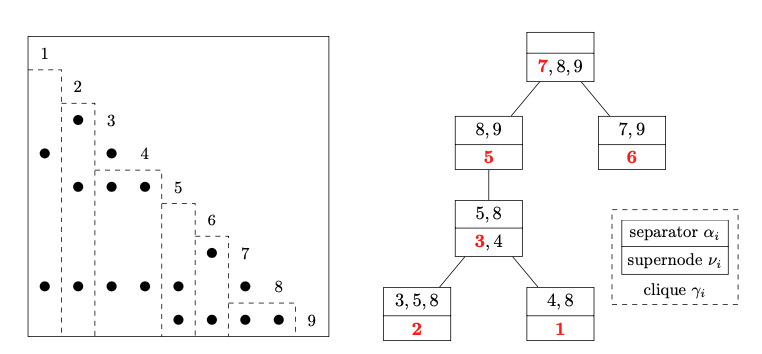

Structured nonlinear and nonconvex optimization

Exploiting problem and data structure is important in both convex and nonconvex optimization. Convex conic optimization, for example, is the basis for general-purpose solvers as well as an important modeling tool for various applications. For certain nonconvex optimization problems, on the other hand, specific problem structure can be leveraged to find a global optimum. My research in this direction exploits structure in the positive semidefinite (PSD) matrix cone, the monotone cone, difference-of-convex (DC) programming, etc.
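To make the DC idea concrete, here is a minimal sketch of the classical difference-of-convex algorithm (DCA) on a one-dimensional toy problem. It is not the framework from the publications listed in this section; the objective f(x) = x^4 - x^2, its split into g(x) = x^4 and h(x) = x^2, and the function name `dca` are assumptions made for illustration. Each iteration linearizes h at the current point and minimizes the resulting convex surrogate, which here has a closed form.

```python
import math

def dca(x0, iterations=50):
    """Classical DCA for f(x) = g(x) - h(x), with g(x) = x**4 and h(x) = x**2."""
    x = x0
    for _ in range(iterations):
        slope = 2.0 * x  # derivative of h at the current iterate
        # Convex subproblem: minimize u**4 - slope * u, i.e. solve 4 * u**3 = slope.
        x = math.copysign(abs(slope / 4.0) ** (1.0 / 3.0), slope)
    return x

print(dca(1.0))   # approaches  1 / sqrt(2)
print(dca(-3.0))  # approaches -1 / sqrt(2)
```

For this toy objective the two limit points are the global minimizers, but DCA in general only guarantees convergence to a critical point. Starting at x0 = 0, for example, the iteration stays at 0, which is a critical point and not a minimizer.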


Selected publications:

A globally convergent difference-of-convex algorithmic framework and application to log-determinant optimization problems

C. Yao, and X. Jiang, 2023

Minimum-rank positive semidefinite matrix completion with chordal patterns and applications to semidefinite relaxations

X. Jiang, Y. Sun, M. S. Andersen, and L. Vandenberghe, 2023

Bregman primal-dual first-order method and application to sparse semidefinite programming

X. Jiang, and L. Vandenberghe, 2022

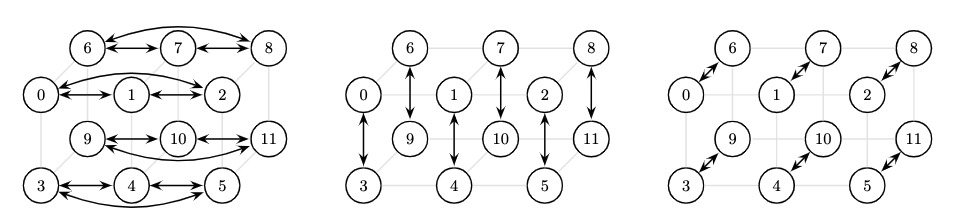

Distributed and decentralized optimization

Distributed and decentralized methods allow computational agents to communicate over a network and collaboratively solve an optimization problem. This area has received increasing attention, partly due to recent interest in federated learning and its privacy concerns. My research involves the design and analysis of distributed algorithms as well as the design of the network topology in decentralized optimization.
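A small example of why topology matters: on a hypercube with n = 2^k agents, if each agent averages with a single neighbor per round (the neighbor whose index differs in one bit), every agent holds the exact network average after k rounds. The sketch below simulates this well-known scheme in plain Python. It is an illustration only, not the construction from the publications listed in this section, and the function name `hypercube_average` is hypothetical.

```python
def hypercube_average(values):
    """Simulate one-peer averaging over a hypercube; len(values) must be a power of two."""
    n = len(values)
    if n == 0 or n & (n - 1):
        raise ValueError("number of agents must be a power of two")
    x = list(values)
    bit = 1
    while bit < n:
        # Round for this bit: agent i averages with agent i XOR bit.
        x = [0.5 * (x[i] + x[i ^ bit]) for i in range(n)]
        bit <<= 1
    return x

print(hypercube_average([0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]))
```

Each agent sends one message per round, so exact consensus among 8 agents costs 3 rounds, compared with the asymptotic convergence of repeated averaging over a fixed ring or grid.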


Selected publications:

Sparse factorization of the square all-ones matrix of arbitrary order

X. Jiang, E. D. H. Nguyen, C. A. Uribe, and B. Ying, 2024

On graphs with finite-time consensus and their use in gradient tracking

E. D. H. Nguyen, X. Jiang, B. Ying, and C. A. Uribe, 2023

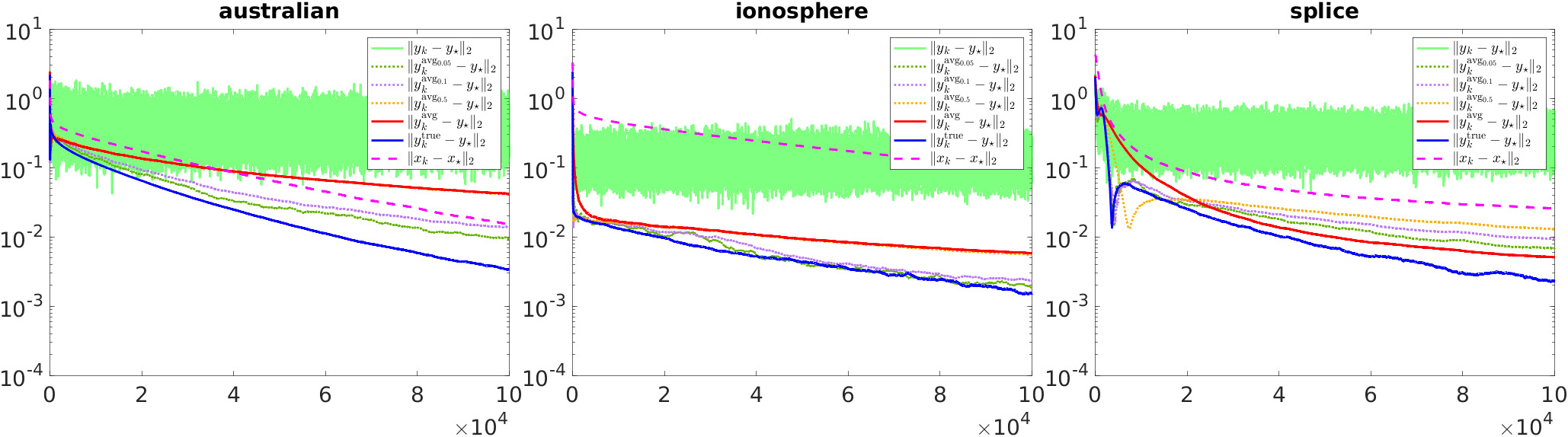

Stochastic constrained optimization

My research focuses on the design, analysis and implementation of efficient and reliable algorithms for solving large-scale nonlinear optimization problems, especially when only stochastic estimates of objective gradients (rather than true gradients) are accessible.
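The setting can be illustrated with a toy problem: minimize 0.5 * ||x - target||^2 using only noisy gradient estimates, subject to the deterministic constraint x[0] + x[1] = 1. The sketch below takes sequential-quadratic-optimization-style steps with an identity Hessian model and a simple diminishing step size. Everything in it (the problem, the noise model, the step-size rule, and the name `stochastic_sqp`) is an assumption made for illustration and is not the algorithm analyzed in the publications listed in this section.

```python
import random

def stochastic_sqp(target, iterations=2000, noise=0.1, seed=0):
    """Toy stochastic SQP-style iteration for an equality-constrained quadratic."""
    rng = random.Random(seed)
    x = [0.0, 0.0]
    for k in range(iterations):
        # Stochastic gradient estimate: true gradient plus Gaussian noise.
        g = [x[i] - target[i] + rng.gauss(0.0, noise) for i in range(2)]
        c = x[0] + x[1] - 1.0  # constraint residual, evaluated exactly
        # Step d and multiplier y solve d + a * y = -g and a^T d = -c with a = (1, 1).
        y = (c - (g[0] + g[1])) / 2.0
        d = [-g[i] - y for i in range(2)]
        alpha = 1.0 / (k + 1)  # diminishing step size
        x = [x[i] + alpha * d[i] for i in range(2)]
    return x

print(stochastic_sqp([2.0, 0.0]))  # close to the constrained minimizer (1.5, -0.5)
```

Because the constraint is evaluated exactly, its residual is driven to zero by the linearized constraint in each step, while the gradient noise only perturbs the iterate along the feasible direction.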


Selected publications:

Almost-sure convergence of iterates and multipliers in stochastic sequential quadratic optimization

F. E. Curtis, X. Jiang, and Q. Wang, 2023

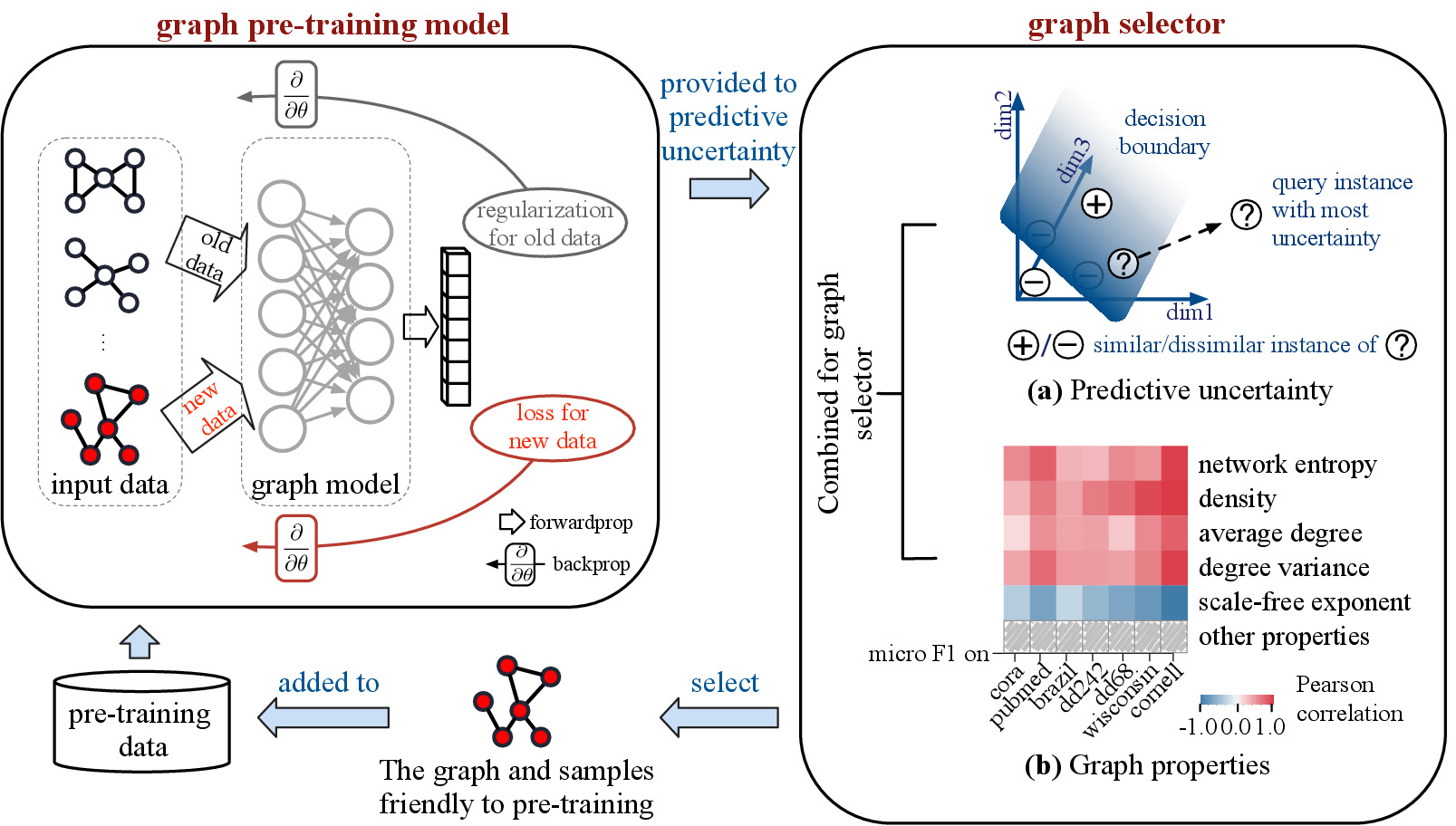

Machine learning for graphical data

My research in this direction aims at building a data-centric, scalable, and robust graph representation model. My recent research interests involve graph representation models with fairness and privacy concerns.


Selected publications:

Better with less: A data-active perspective on pre-training graph neural networks [NeurIPS’23]

J. Xu, R. Huang, X. Jiang, Y. Cao, C. Yang, C. Wang, and Y. Yang

Blindfolded attackers still threatening: Strict black-box adversarial attacks on graphs [AAAI’22]

J. Xu, Y. Sun, X. Jiang, Y. Wang, C. Wang, J. Lu, and Y. Yang

Unsupervised adversarially robust representation learning on graphs [AAAI’22]

J. Xu, Y. Yang, J. Chen, X. Jiang, C. Wang, J. Lu, and Y. Yang